ImageNet Auto-annotation

Overview

ImageNet is a large-scale, hierarchical dataset [1] with thousands of classes. Although the dataset contains over 14 million images, only a fraction of them has bounding-box annotations (~10%) and none have segmentations (object outlines).

We provide here the results of our large-scale knowledge transfer mechanisms to localize and segment objects, applied to a subset of about 500K images of the ImageNet. This means you can find here 500K bounding-boxes and segmentations for more than 500 object classes. These bounding-boxes and segmentations can be further used to train object detectors, shape models, edge detectors and other models of visual world that require labelled objects for training.

Bounding-boxes for objects with self-assessment

For auto-annotations to be used as training data for other tasks, it is important that they are of high quality. Otherwise they will lead to models fit to their errors. In [5] we produce bounding-boxes with a self-assessment score, i.e. estimation of their quality. This allows us to return only accurate boxes, automatically discarding the rest. You can download the bounding boxes and their self-assessment scores produced by our method AE-GP using "family" as source (see [5] sec. 2). Using self-assessment score you can, for example, automatically select bounding-boxes from 30% of images with highest localization accuracy (i.e.~73% average overlap with ground-truth for our AE-GP method).

Below you see a demo of AE-GP method with Convolutional Neural Network (CNN) features, which gives even better results (~79% average overlap for 30% of images with highest localization accuracy):

Image area to box size ratio < [0.31]

and predicted overlap > [00.9]

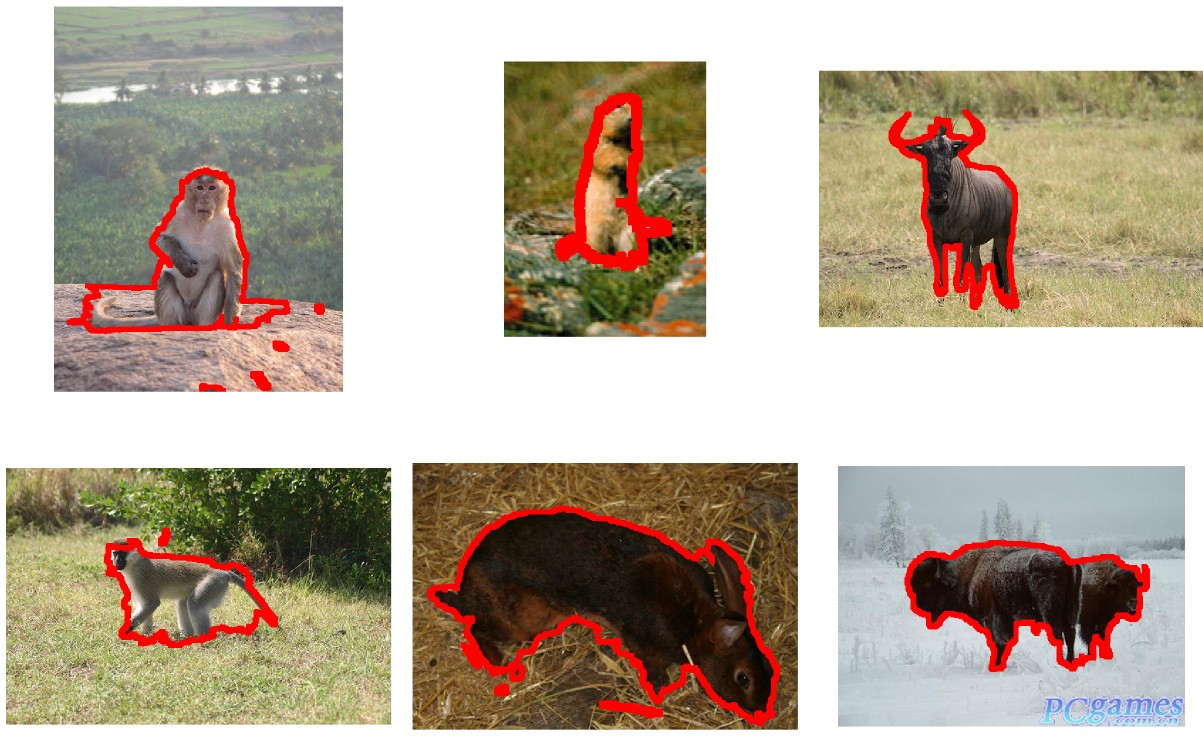

Segmentations for objects

Here we provide pixel-level annotations for every image in the same set of 500K images obtained by [3,4]. The key idea in [3,4] is to recursively exploit images segmented so far to guide the segmentation of new images. At each stage this propagation process expands into the images which are easiest to segment at that point in time, e.g. by moving to the semantically most related classes to those segmented so far.

The evaluation of our approach on 4460 manual segmentations from Amazon Mechanical Turk (available below), shows that we achieve per-pixel accuracy of 84.4% and mean IoU of 57.3. This method does not provide self-assessment - there is no quality score associated with the output.

Downloads

| Description | File size | Link |

|---|---|---|

| Half a million bounding-boxes with self-assessment score [5]. | 42 MB | Boxes, Readme | NEW! Segmentations V2 from 2013/11/29. These segmentations correspond to the system described in the tech report [4] using superpixels and accelerated EM, and correct an important bug from the previous version (which are thus deprecated). | 780 MB | Segmentations |

| 4276 images with ground-truth segmentations (automatically collected from AMT, manually cleaned-up after ECCV12) | 1.2 Gb | Ground-truth |

| The 68K ground-truth boxes we have used from ImageNet | 1.5 MB | Ground-truth boxes |

| Here you can download the results of our earlier approach [2] and Matlab code to load them. It lacks self-assessment and is less accurate. | 10.0 MB | Boxes, Code |

| Code for computing objectness windows | Project page |

References

- ImageNet: A Large-Scale Hierarchical Image Database

,

In IEEE Conference on Computer Vision & Pattern Recognition (CVPR), 2009.

![url [url]](../icons/url.png)

- Large-scale knowledge transfer for object localization in ImageNet

,

In IEEE Conference on Computer Vision & Pattern Recognition (CVPR), 2012.

![pdf [pdf]](../icons/pdf.png)

![url [url]](../icons/url.png)

![cites [cites]](../icons/google_scholar.png)

- Segmentation Propagation in ImageNet

,

In European Conference on Computer Vision (ECCV), 2012. (oral, best paper award)

![pdf [pdf]](../icons/pdf.png)

![url [url]](../icons/url.png)

![cites [cites]](../icons/google_scholar.png)

-

ImageNet Auto-annotation with Segmentation Propagation

,

In International Journal of Computer Vision (IJCV), 2015, to appear.

![pdf [pdf]](../icons/pdf.png)

![url [url]](../icons/url.png)

- Associative embeddings for large-scale knowledge transfer with self-assessment

,

In IEEE Conference on Computer Vision & Pattern Recognition (CVPR), 2014.

![pdf [pdf]](../icons/pdf.png)

![url [url]](../icons/url.png)

- Measuring the objectness of image windows

,

In IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2012.

![pdf [pdf]](../icons/pdf.png)

![url [url]](../icons/url.png)

-

Figure-ground segmentation by transferring window masks

,

In IEEE Conference on Computer Vision & Pattern Recognition (CVPR), 2012.

![pdf [pdf]](../icons/pdf.png)

![url [url]](../icons/url.png)

-

GrabCut: interactive foreground extraction using iterated graph cuts

,

In ACM Transactions on Graphics (SIGGRAPH), volume 23, 2004.

![doi [doi]](../icons/doi.png)