Luca Del Pero, Susanna Ricco, Rahul Sukthankar, Vittorio Ferrari

University of Edinburgh (CALVIN), Google Research

Overview

This dataset contains video shots of three object classes: tigers, sourced from nature documentaries, and horses and dogs, sourced from the YouTube-Objects dataset. Each shot contains at least one instance of the corresponding class. We annotated each frame independently with the behavior performed by the class instance, such as walk, run, and turn head (if multiple instances are visible, we annotated the behavior of the one closest to the camera). For a subset of the horse and tiger videos, we also manually annotated the 2D location of 19 landmarks in each frame (e.g. left eye, neck, front left ankle). Unlike coarser annotations such as bounding boxes, the landmarks make it possible to evaluate the alignment of objects with non-rigid parts more accurately. We also provide foreground segmentation masks computed using the software by Papazoglou and Ferrari. For more details, see the included README.
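Landmarks allow a finer evaluation of alignment than bounding boxes. The sketch below makes this concrete with a simple error measure. It is a hypothetical metric, not necessarily the one used in our papers. Given the 19 landmarks of one frame mapped into another frame by the alignment under test (`pred`) and the annotated landmarks of that other frame (`gt`), it averages the Euclidean distance over the landmarks visible in both. The function name, the `None` convention for missing landmarks, and the in-memory representation are all assumptions; the actual annotation file format is described in the README.

```python
import math
from typing import Optional, Sequence, Tuple

# Assumed representation: a landmark is an (x, y) pixel position,
# or None when it is not visible / not annotated in that frame.
Landmark = Optional[Tuple[float, float]]

NUM_LANDMARKS = 19  # the dataset annotates 19 landmarks per frame


def mean_landmark_error(pred: Sequence[Landmark], gt: Sequence[Landmark]) -> float:
    """Hypothetical alignment error: mean Euclidean distance between
    corresponding landmarks, skipping those missing in either frame."""
    if len(pred) != NUM_LANDMARKS or len(gt) != NUM_LANDMARKS:
        raise ValueError(f"expected {NUM_LANDMARKS} landmarks per frame")
    dists = [
        math.dist(p, g)  # Euclidean distance between two (x, y) points
        for p, g in zip(pred, gt)
        if p is not None and g is not None
    ]
    if not dists:
        raise ValueError("no landmark is visible in both frames")
    return sum(dists) / len(dists)
```

For example, if every mapped landmark lands 3 pixels below its annotated position, the error is 3.0. A bounding-box overlap score, by contrast, can stay high even when the head of one animal is mapped onto the tail of the other.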

A few examples of the labelled behaviors

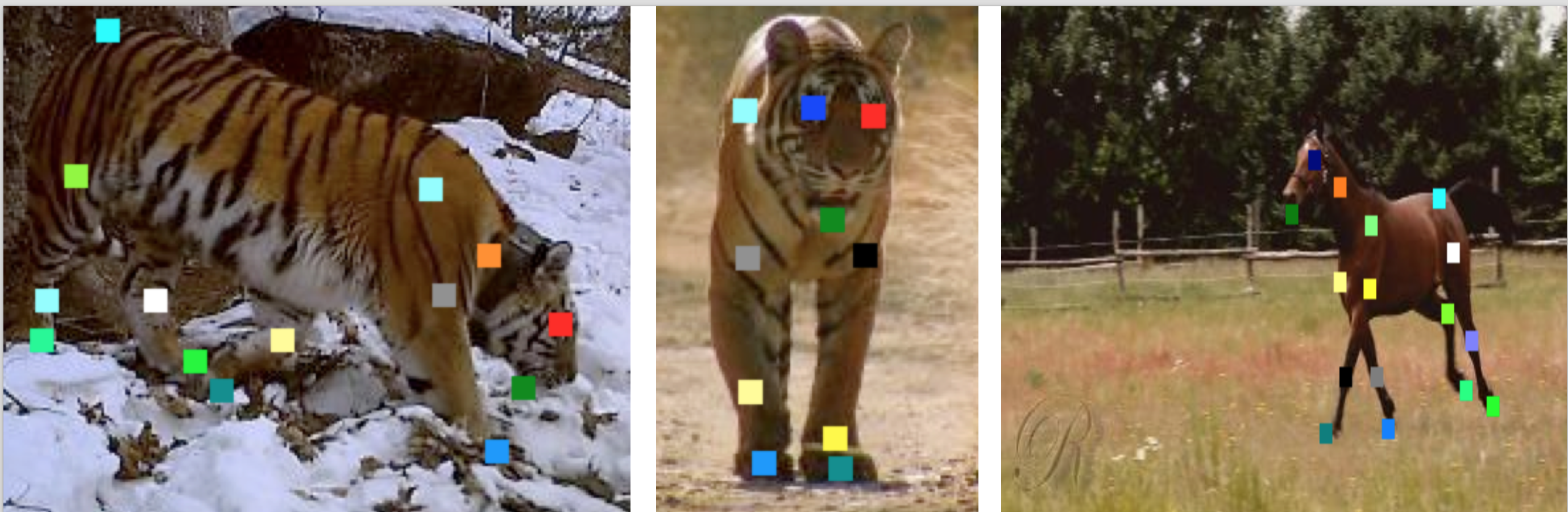
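Because behaviors are labelled frame by frame, it is often convenient to group consecutive frames with the same label into segments. The sketch below assumes the labels of one shot have already been loaded as a list of strings, one per frame. The on-disk format is described in the README, and the helper name and label strings in the example are illustrative.

```python
from itertools import groupby
from typing import List, Sequence, Tuple


def label_segments(frame_labels: Sequence[str]) -> List[Tuple[str, int, int]]:
    """Hypothetical helper: group per-frame behavior labels into
    (label, first_frame, last_frame) runs, with 0-based inclusive indices."""
    segments: List[Tuple[str, int, int]] = []
    start = 0
    # groupby yields one group per run of identical consecutive labels
    for label, run in groupby(frame_labels):
        length = sum(1 for _ in run)
        segments.append((label, start, start + length - 1))
        start += length
    return segments
```

For instance, `label_segments(["walk", "walk", "run", "run", "run", "turn head"])` returns `[("walk", 0, 1), ("run", 2, 4), ("turn head", 5, 5)]`.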

A few examples of the annotated landmarks

Downloads: Version 2.0

This version contains all the videos, the behavior labels, the landmarks, and the segmentation masks for all three object classes (dog, horse, tiger). We release it together with our IJCV 2016 paper.

Downloads: Version 1.0 (no horse class, no landmarks)

| Filename | Description | Release Date | Size |
|---|---|---|---|
| README.txt | Description of contents | 25 June 2015 | 7.2 KB |
| tiger.tar.gz | Tiger video shots | 02 June 2015 | 3331.0 KB |
| dog.tar.gz | Dog video shots | 02 June 2015 | 1570579.6 KB |
| ranges.tar.gz | File ranges | 02 June 2015 | 14.9 KB |
| behaviors.tar.gz | Behavior labels | 02 June 2015 | 111.5 KB |
| tigerSeg.tar.gz | Tiger segmentations | 02 June 2015 | 46824.6 KB |
| dogSeg.tar.gz | Dog segmentations | 02 June 2015 | 12578.9 KB |

This version contains the videos, the behavior labels, and the segmentation masks for tigers and dogs. It is a subset of version 2.0. We release it together with our CVPR 2015 paper.
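The following is a minimal sketch for unpacking the Version 1.0 archives with Python's standard `tarfile` module, assuming they have been downloaded into a single directory. The `extract_all` helper and the choice to extract each archive into its own subdirectory are assumptions, not part of the dataset release. The `filter="data"` safeguard is only used on Python versions that provide it.

```python
import tarfile
from pathlib import Path
from typing import List

# File names as listed in the Version 1.0 download table.
ARCHIVES = [
    "tiger.tar.gz",
    "dog.tar.gz",
    "ranges.tar.gz",
    "behaviors.tar.gz",
    "tigerSeg.tar.gz",
    "dogSeg.tar.gz",
]


def extract_all(download_dir: Path, out_dir: Path) -> List[str]:
    """Hypothetical helper: extract each downloaded archive into
    out_dir/<name without .tar.gz>/ and return the names of missing archives."""
    missing: List[str] = []
    for name in ARCHIVES:
        archive = download_dir / name
        if not archive.is_file():
            missing.append(name)
            continue
        target = out_dir / name[: -len(".tar.gz")]
        target.mkdir(parents=True, exist_ok=True)
        with tarfile.open(archive, "r:gz") as tar:
            if hasattr(tarfile, "data_filter"):
                # Newer Pythons: reject absolute paths, "..", and other unsafe members.
                tar.extractall(target, filter="data")
            else:
                tar.extractall(target)
    return missing
```

Returning the missing names rather than raising lets you download and unpack the classes you need (e.g. only the tiger files) without editing the list.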

Citations

We release this dataset together with our CVPR ’15 paper on articulated motion discovery and our IJCV ’16 paper on discovery and spatial alignment of behaviors (see our project page). If you use this dataset for your research, please cite:

@INPROCEEDINGS{delpero15cvpr,
  author = {Del Pero, L. and Ricco, S. and Sukthankar, R. and Ferrari, V.},
  title = {Articulated motion discovery using pairs of trajectories},
  booktitle = {Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  year = {2015}
}

@ARTICLE{delpero16ijcv,
  author = {Del Pero, L. and Ricco, S. and Sukthankar, R. and Ferrari, V.},
  title = {Behavior Discovery and Alignment of Articulated Object Classes from Unstructured Video},
  journal = {International Journal of Computer Vision (IJCV)},
  year = {2016}
}

Important Notice

These videos were downloaded from the internet and may be subject to copyright. We do not own the copyright of the videos and provide them only for non-commercial research purposes.

Acknowledgements

This work was partly funded by a Google Faculty Award and by the ERC Grant “Visual Culture for Image Understanding”. We thank Anestis Papazoglou for helping with the data collection.